The question haunting our society today isn't whether AI will change how we learn and work; it's whether AI will quietly determine the boundaries of our imagination and the depth of our thinking.

I recently listened to The Free Press debate "Will the Truth Survive Artificial Intelligence?" featuring Fei-Fei Li, Nicholas Carr, and Jaron Lanier, three prominent voices on AI and technology. While the conversation touched on crucial concerns about AI's impact on information and learning, I found myself wanting to go deeper into the fundamental question that emerged: Are we using AI as a tool that amplifies human agency, or are we surrendering our creative authority to systems designed to capture attention and profit?

The debate crystallized tensions that anyone using AI must grapple with, but I believe we need to push beyond surface-level discussions of efficiency and misinformation to examine what's really at stake: our capacity for independent thought, creative expression, and authentic human development.

The Real Battle: Agency vs. Automation

The debate revealed two fundamentally different visions of AI in education. On one side, Stanford's Fei-Fei Li argued that learning depends on one "magic word": motivation. Whether students use "a rock, a piece of paper, a calculator, or ChatGPT," what matters is "the willingness to learn" and the agency of the learner. AI, in her view, is simply a tool; how we choose to use it is what determines educational outcomes.

On the other side, author Nicholas Carr warned that AI "undermines learning" at a fundamental level. He argued that we learn by "exercising our brain by working hard to discover answers," through "struggling with difficult texts" and "synthesizing what we've learned in our own mind." When AI removes that struggle, he contends, we get "this illusion of thinking you know something without going through the hard work of actually learning it."

Both perspectives carry truth, but they miss a crucial element: the role of intentional design in shaping how AI enters educational spaces.

The Business Model Problem

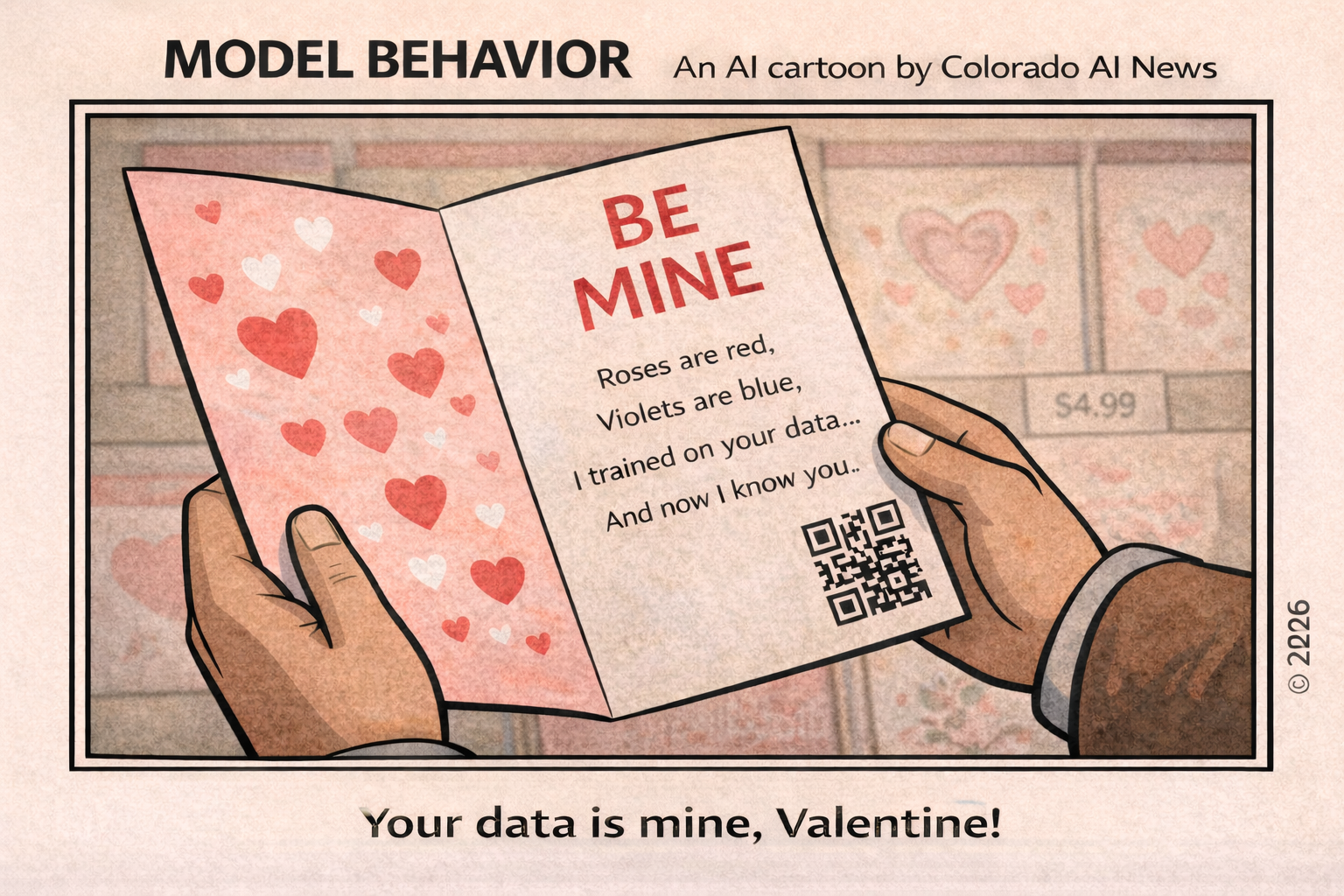

Jaron Lanier's contribution to the debate cuts to the heart of why this matters for women in AI: Silicon Valley's business model is "to get paid by third parties to influence or fool essentially the users."

When OpenAI offers free premium services to students for the first months of the school year, we're not witnessing educational innovation; we're seeing market capture.

This is where anyone committed to responsible AI development has a critical opportunity. As Lanier pointed out, there are no "independent machine values"; machine values are human values. The question becomes: Whose human values are embedded in the AI systems entering our classrooms, workplaces, and daily lives?

A Local Model: Creative Agency in Action

Here in Colorado, we've been exploring what it means to approach AI with intentional "creative agency," the deliberate use of AI as a collaborative partner rather than as a replacement for human thinking.

As I mentioned in my first article for Colorado AI News, "Women in AI Colorado has a vision that seeks to meet the challenges of our AI era by positioning women not as passive recipients of technological change, but as active architects of our AI-powered future." Our community has been developing principles that apply whether someone is in a classroom, boardroom, or anywhere else that humans and AI intersect.

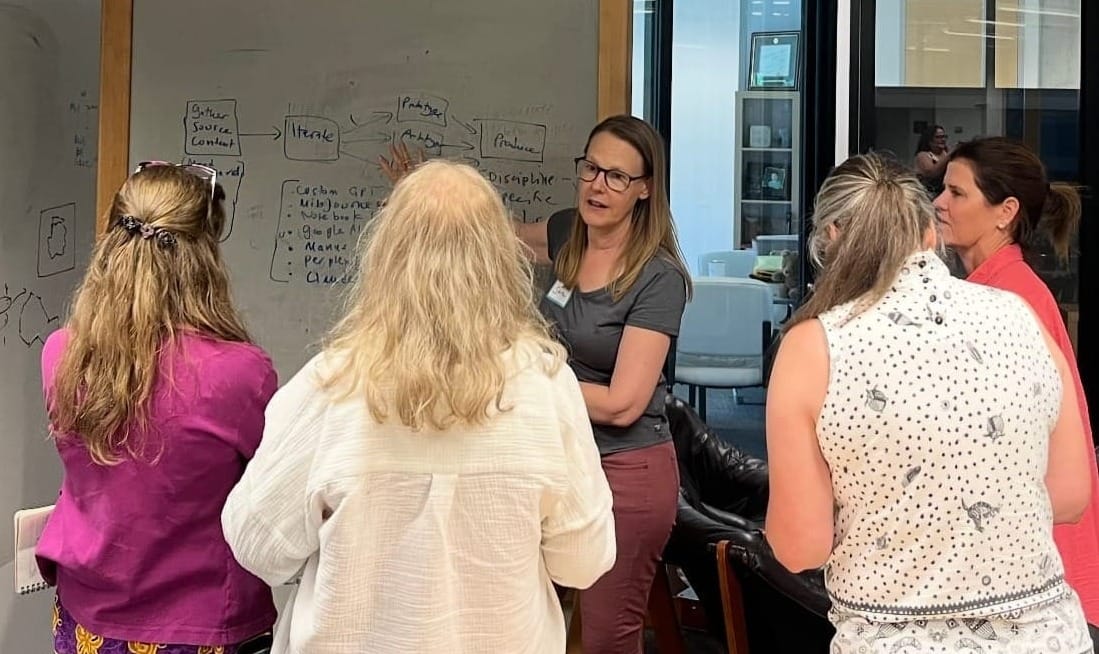

But we're not stopping at principles. We're conducting action-based research through learning labs where 14-20 women form collaborative pods of three or four participants. Each pod commits to weekly AI experiments, but with a crucial difference: rigorous reflection on what works, what doesn't, and how to adapt. This isn't just AI adoption; it's developing a library of human-AI interaction patterns that could transform how society approaches these powerful tools.

Our hypothesis is simple but revolutionary: Individuals must engage their creative agency to reclaim intentional design choices in how AI shapes our work and thinking. Through systematic experimentation and reflection, we're uncovering a methodology that captures how people actually adopt AI, learn with AI, and transform their work through AI partnerships, on their own terms.

What we're discovering challenges conventional AI training approaches. Some participants need extensive modeling before experimenting, while others dive in immediately. We're identifying what we call "curiosity capacity," a threshold that determines how people engage with AI tools. Rather than one-size-fits-all methodologies, we're developing approaches that meet people where they are and foster the curiosity that drives meaningful AI partnerships.

These initial findings reveal three key principles that apply across contexts:

Design for Dignity: When we use AI in learning environments, we must ask not only, "Does this work?" but also, "Does this honor the learner's intellectual development?" AI summaries might deliver information efficiently, but as Carr noted, they "remove everything interesting, everything hard, everything difficult, everything subtle, everything nuanced." Our role is to design AI interactions that preserve the struggle that leads to genuine understanding.

Question the Source: Every AI tool carries embedded assumptions about what good work should look like. When an AI writing assistant suggests a structure for student essays or business proposals, we must ask: "Whose definition of 'good writing' is it reinforcing? How might it be limiting rather than expanding our expressive possibilities?"

Prioritize Process Over Product: The most transformative use of AI happens when we focus on how we think with AI, not just what we produce. Whether in educational or professional contexts, this means making the conversation between human and machine visible: justifying our prompts, critiquing AI outputs, and synthesizing our own insights.

What Educators Can Do Now

The path forward requires what Fei-Fei Li called developing "agency," but this doesn't happen automatically. It requires intentional design in whatever context we find ourselves:

- Reimagine Assessment in Education: Move beyond fact-based assessments to complex, evaluative tasks that require students to use AI as a research partner while demonstrating their own critical thinking. The goal isn't to eliminate AI but to make human reasoning visible within AI-assisted work.

- Teach AI Literacy as Creative Practice: Help students understand AI not as an oracle but as a creative collaborator with biases, limitations, and embedded assumptions. When students learn to critique and redirect AI outputs, they develop both digital literacy and critical thinking skills.

- Model Reflective AI Use: Faculty need space to explore fundamental questions: What is the purpose of education when information is instantly accessible? How do we balance efficiency with deep learning? What aspects of learning should remain distinctly human?

The Stakes for Women in AI

This isn't just an educational challenge; it's a question of who gets to shape the future of human-AI collaboration. Women's underrepresentation in AI development isn't just a diversity problem; it's a design problem. Research consistently shows that diverse teams build more robust, less biased systems, but the value women bring goes beyond representation metrics.

Women in AI often approach technology development with what researchers call "relational thinking": considering not just whether something works, but how it affects relationships, communities, and long-term human wellbeing. This perspective is crucial when designing AI systems that will reshape how humans learn, work, and relate to each other. We're more likely to ask: "What are the second- and third-order effects of this technology? Who might be harmed? How does this preserve human dignity?"

Additionally, women frequently bring their lived experience of navigating systems not designed for them. This experience translates into designing AI that works for diverse users rather than defaulting to narrow assumptions. When we've had to advocate for our voices to be heard, we understand the importance of building systems that amplify rather than silence human agency.

Perhaps most critically, women in AI are often motivated by impact beyond profit. We're building AI with questions like: "How does this help people flourish? How does this expand rather than constrain human potential?" This values-driven approach is exactly what's needed as AI becomes more powerful and pervasive.

As Lanier emphasized, we need "data dignity," recognizing and honoring the human sources of AI knowledge. Women's perspectives are essential to ensuring that AI development prioritizes human flourishing over efficiency metrics, and that human agency, not automation, drives the most powerful AI partnerships.

Moving Forward with Intent

The question facing all of us isn't whether to use AI; that ship has sailed. The question is whether we'll use AI to expand human potential or constrain it. Will we design learning and working experiences that help people develop their own voices, or will we settle for efficiency tools that gradually erode the very capacities we're trying to develop?

Our learning labs at Women in AI Colorado represent one approach to answering this question through systematic experimentation rather than speculation. As we develop rigorous research methodologies to capture the impact of human-AI collaboration, we're building evidence for what works, not just in theory, but in practice.

The future of truth in an AI age won't be determined by the algorithms themselves. It will be shaped by the researchers, educators, designers, and leaders who choose to prioritize human agency, critical thinking, and authentic development over the convenience of automated solutions.

As Fei-Fei Li concluded: "What AI does to truth is up to us, not AI." The question is: Are we ready to take up that responsibility?

Women in AI Colorado hosts monthly events exploring the intersection of AI, creativity, and human agency, featuring technical showcases where community members share how they're using AI in their workflows and lives, plus facilitated share-out circles for AI experiments and discoveries. Separately, we run six-week learning lab cohorts where participants conduct systematic AI experiments in collaborative pods. Connect with us to join monthly meetings or hear more about our upcoming learning lab cohorts. Contact me directly (susanrows at gmail.com) to explore how we're shaping AI's role in human flourishing.