Today marks World Brain Day, an annual campaign from the World Federation of Neurology aimed at promoting neurological wellness across all ages. For 2025, the spotlight is on “Brain Health for All Ages,” a timely theme as AI steadily closes in on domains once thought exclusively human: cognition, emotion, and reason, to name just a few. But how far can AI go? And what must we be cautious of?

Colorado Enters the Conversation

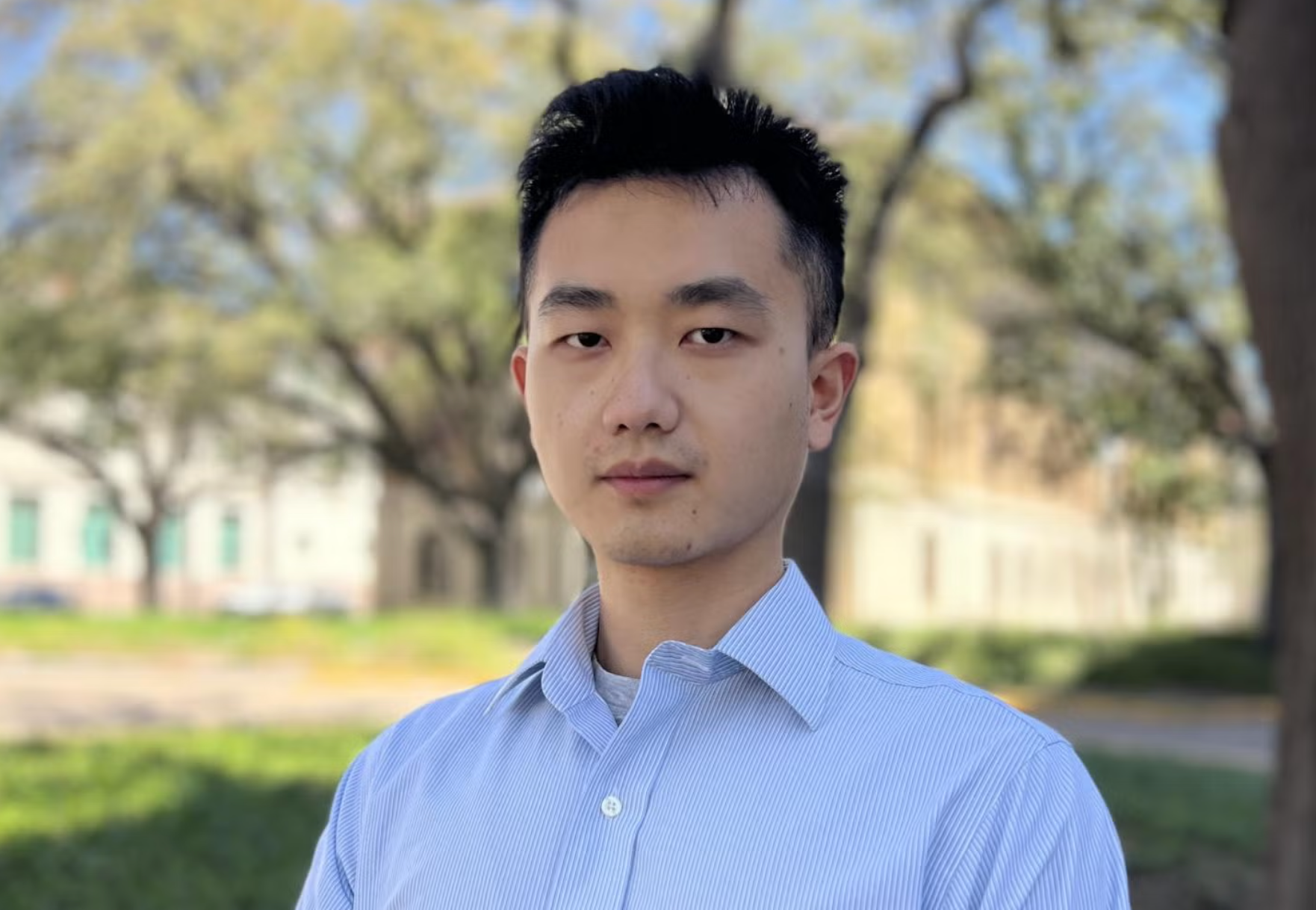

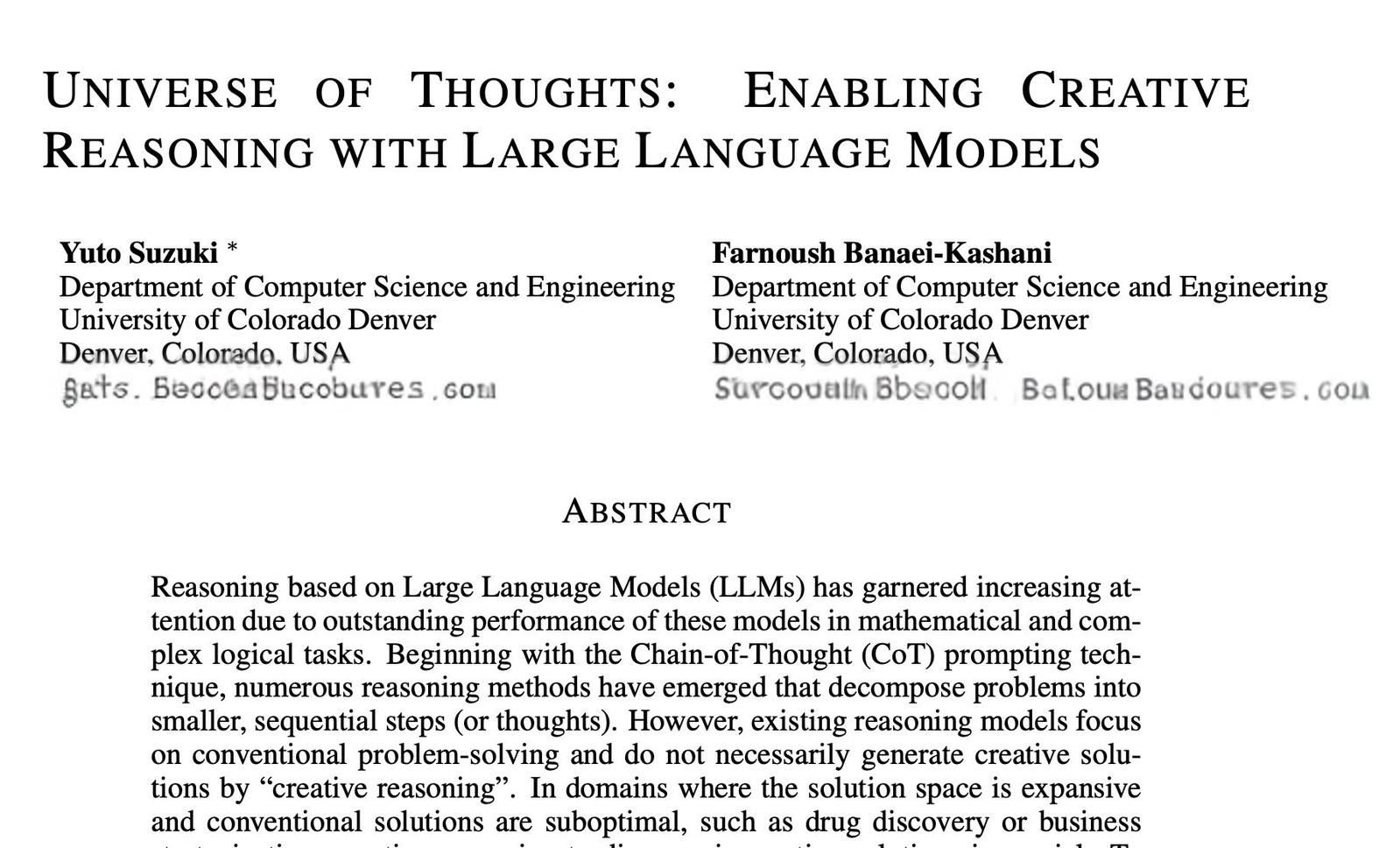

Coloradans are answering those questions head-on. At CU Boulder, Dr. Theodora Chaspari is tackling the tricky intersection of AI, speech, and mental health. Her recent study, published in Frontiers in Digital Health, exposed a major issue: speech-based AI mental-health tools can misfire, especially across gender and racial lines.

As a story in CU Boulder Today describes it, AI, just like people, can make assumptions based on race or gender. Chaspari, an associate professor in the Department of Computer Science, explains, “If AI isn’t trained well, or doesn’t include enough representative data, it can propagate these human or societal biases.”

Chaspari continues, “With artificial intelligence, we can identify these fine-grained patterns that humans can’t always perceive. However, while there is this opportunity, there is also a lot of risk.” Case in point: AI tools have tended to underdiagnose anxiety in Latino speakers and to miss signs of depression in women more often than in men.

In short: the technology may appear precise, but when it’s trained on data that doesn’t represent everyone equally, it can quietly amplify disparities rather than reduce them. What sounds like a clinical breakthrough could actually be a diagnostic setback for many.

CU Anschutz: Turning AI Caution into Action

At the CU Anschutz Medical Campus, the focus is just as much on ethical guardrails as it is on technological advancement. In February, Anschutz hosted its “Engaging with AI” forum, drawing a wide range of faculty and health professionals to discuss how AI is reshaping clinical care – and how to keep it from undermining human judgment.

Matthew DeCamp, MD, PhD, an associate professor who focuses on medical ethics and health technology at CU Anschutz’s Center for Bioethics and Humanities, made a point that continues to resonate:

“Sometimes that sense of awe could blind us to what could be missing. We want to be amazed, but we can’t let that blind us to the fact that these tools are just tools. They make mistakes, they’re inaccurate at times, and we have to be vigilant to the potential for that, even as they get better.”

To illustrate, he invoked a classic metaphor: “Give a small boy a hammer, and he will find that everything he encounters needs pounding.” In other words, just because AI is a powerful tool doesn’t mean it’s the right one for every task. And unless clinicians and developers build in checks, the consequences can be damaging – especially in areas like mental health, where human nuance matters most.

Why Humanity Still Wins

AI can parse patterns and crunch data faster than any clinician. It can analyze tone, detect shifts in language, and sift through charts at high speed. But what it lacks is what the human brain does best: moral reasoning, contextual understanding, and empathy.

Both Chaspari and DeCamp point to a shared principle: AI should support – but not fully replace – human expertise. That includes designing systems that are not only accurate but inclusive and explainable. The path forward isn’t to build machines that think like us, but tools that help us think better.

Celebrate, Question, and Connect

On World Brain Day (and shouldn't every day be World Brain Day?), consider this to-do list:

- Talk face-to-face, not just screen-to-screen: AI can amplify connections, but it shouldn’t replace them.

- Support human-in-the-loop systems: Hybrid tools enhance decision-making instead of replacing it.

- Engage your own brain: Go for a hike, do something new, read something difficult, challenge your assumptions. Brains don’t just process – they imagine.

Final Take

The work of these two researchers, among the many Coloradans studying AI, offers a reminder: AI may change how we understand and monitor the brain, but it shouldn’t change how we value it, and we need to stay aware of AI’s many limitations.

If we’re going to build AI tools to serve our minds, we have to bring our whole minds to that task: curiosity, creativity, and care. That’s the real challenge – and the real opportunity.