We’ve all seen the video clip far too many times by now: Andy Byron, then-CEO of Astronomer, embracing Kristin Cabot, the company’s head of HR, at a Coldplay concert. The moment spread like wildfire across social media and the entire web – shared, dissected, and meme-ified in real time.

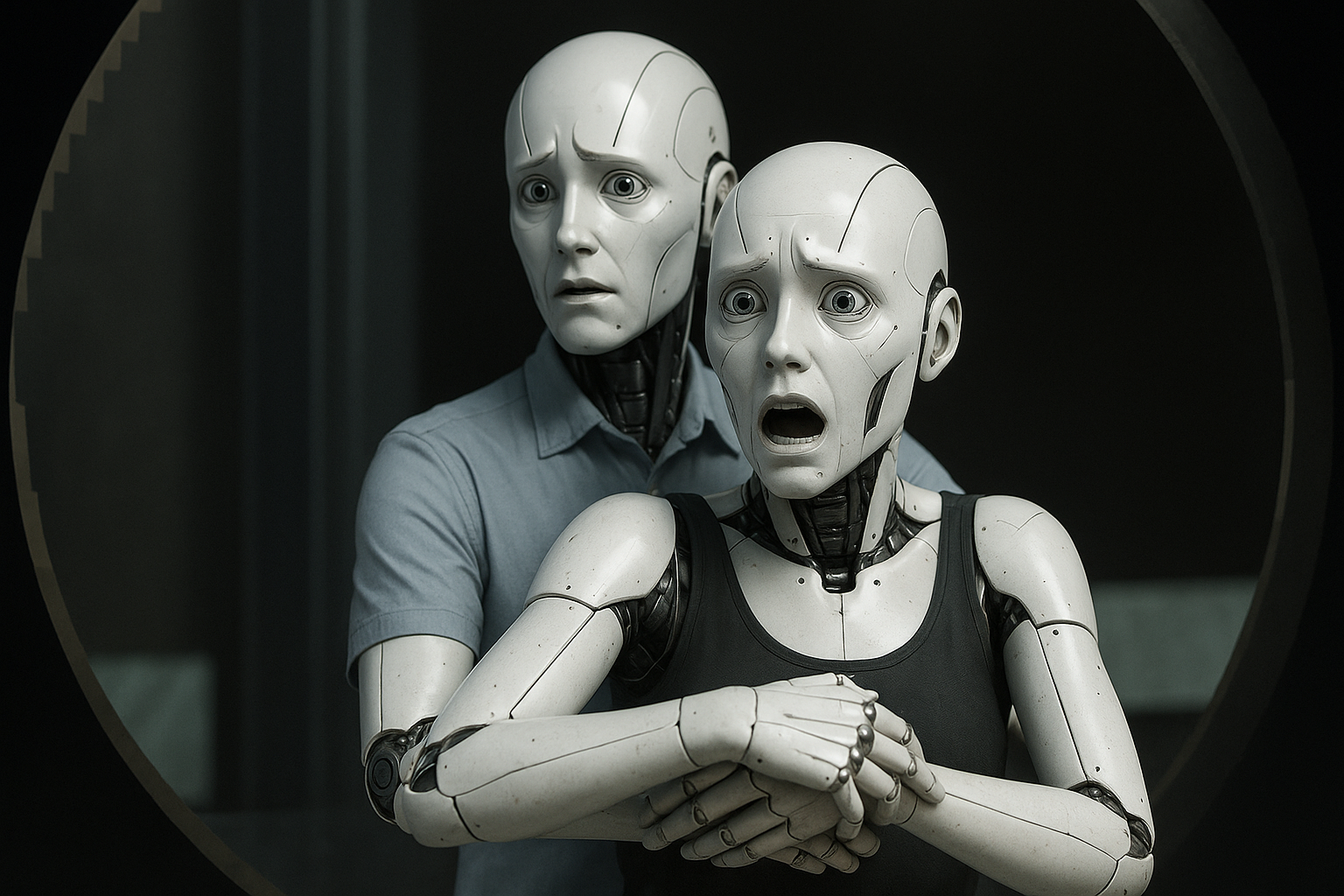

But while the video was real, it felt like a preview of a world where reality itself is up for debate. The next scandal might not involve actual behavior – it might be entirely fabricated: a fake confession, a manufactured kiss, maybe a made-up Karen moment.

The technology to do this already exists, of course. Even more important, it's already in millions and millions of hands right here in the U.S. Yet our ability to deal with it – legally, ethically, psychologically – isn't even close to ready.

As I discussed here two weeks ago, "Advances in generative AI have turned photos, videos, voices, and even entire backstories into tools of manipulation. These aren’t just digital curiosities or harmless pranks – they’re undermining truth, trust, and the very foundations of our shared reality." No question: The AI lies have continued to pile up.

The Deepfake Era Is (Almost) Here

Looking back, the 2024 presidential election wasn't the deepfake disaster many anticipated, but that doesn't mean we're in the clear. AI tools are advancing rapidly: the software is getting better, faster, and much more accessible.

In fact, according to TechRadar, deepfake content is expected to jump from 500,000 clips in 2023 to over 8 million this year. And it’s not just state actors or hackers – these tools are becoming playground toys. A teenager with a laptop and a bit of free time can now create synthetic video good enough to fool millions. Looking ahead, it’s likely that the 2026 midterms will be the first truly deepfake-saturated election cycle in U.S. history – but none of us will have to wait that long for the deepfake tsunami.

Ultimately, the deeper danger is not that we’ll be fooled by something fake – it’s that we’ll stop believing anything at all. And we're already going down that path.

The Collapse of Trust

Americans no longer trust their institutions – and haven't for decades. According to Pew Research, trust in the federal government has plunged from roughly three-quarters of Americans in the mid-1960s to 22% today. Gallup shows similar nosedives in confidence across banks, public schools, the judiciary, and especially the media.

Even more troubling: trust has become tribal. One side believes; the other side disbelieves. Axios recently described this as the “new trust gap,” where facts, footage, and institutions themselves are filtered entirely through party loyalty.

In that environment, deepfakes aren’t just a technical threat. They’re a social accelerant. They don’t just confuse; they confirm what people already want to believe.

The Shame & Anger Machine Is Always On

The video of Astronomer's Byron and Cabot went viral because it hit one of our culture's sweet spots: a powerful political leader or executive brought down by a sex scandal. The reflex wasn't curiosity – it was outrage, schadenfreude, mockery, and a collective online smirk. For at least 72 hours, everyone in America had something to say – and in this case, the chorus was almost unanimous: "What were you two thinking?"

A similar thing happened after the assassination of UnitedHealthcare CEO Brian Thompson on the streets of New York City last year: security-camera clips were shared millions of times before the public even understood exactly what had happened, or why. But what probably shocked social critics most about all the online attention was the nearly universal anger at the healthcare industry – and, by proxy, its executives. In some circles, this led to the glorification of the alleged assassin. That's not healthy – for any of us.

Social media isn’t built for nuance, context or caution. It’s built for spectacle. And above all else, it rewards emotional response. That's not a good thing, because nuance is critical to a functional democracy and a civilized society. To make things worse, those same social media platforms in the not-too-distant future will be flooded with viral content that can’t be verified – and far too few people will even care whether it’s real or not.

Deepfakes Won’t Just Fool Us – They’ll Erode Belief

Experts call this erosion of trust the liar’s dividend: Once fake content is common, real content becomes suspect. If a video goes viral and it’s inconvenient, just call it a deepfake. If it’s flattering, insist it’s real. When everything is deniable, nothing is reliable.

And most of us aren’t equipped to tell the difference. Studies show people detect deepfakes only slightly better than chance – and much worse when emotion is involved. Even AI detection tools struggle in the wild, especially with videos shared out of context.

The threat isn’t that we’ll believe something false. It’s that we’ll stop believing anything at all.

Here’s What We’re Losing

Shared truths: In an era of AI-generated media and viral deception, our ability to agree on a common set of facts is crumbling. When each side can claim that damning footage is "just a deepfake" – even if it's real – the very foundation of democratic discourse starts to erode. Without shared truths, debate becomes impossible, and manipulation thrives.

Trust in each other: As fakes become indistinguishable from reality, we risk seeing everyone – colleagues, leaders, even loved ones – through a filter of suspicion. If video evidence can be convincingly forged or conveniently denied, how can we truly believe one another? This loss of interpersonal trust fractures communities and creates a culture of doubt.

Trust in institutions: A democracy’s foundational institutions – such as the media, government, science, and the courts – have all experienced long-term declines in public confidence, and AI threatens to deepen the chasm. When videos or audio recordings can be fabricated with ease, bad actors have a powerful tool to discredit truth-tellers or frame innocents. Every fabricated scandal chips away at the already fragile belief that our institutions serve the public good.

Accountability itself: The paradox is chilling: Real misdeeds might be dismissed as fake, while fake ones might be accepted as real. In both cases, accountability suffers. Those caught on camera may successfully deny reality – while those targeted by fakes may be punished before the truth can catch up.

Our shared reality: It used to be that we disagreed about what things meant. Now we can't even agree that they happened. (What more needs to be said?)

What We Can – and Must – Do

Build digital literacy that goes beyond spotting the fake: It’s not enough to teach people how to recognize blurred edges or inconsistent lighting. We need to cultivate a generation of skeptics – people who pause before they share, who question emotionally charged content, and who ask: “What am I being asked to believe? And why now?” Schools, companies, and governments should embed this kind of critical thinking into everything from curricula to mandatory AI training.

Force social platforms to act as responsible gatekeepers: Platforms need to slow down virality when authenticity is in doubt. That means flagging suspect content, limiting algorithmic reach, and embedding provenance data in synthetic media. AI-generated video should carry visible and invisible watermarks by default – not as a nice-to-have, but as a baseline requirement for distribution.

Demand radical transparency from institutions: The only way to rebuild trust is to over-communicate. Government agencies, courts, newsrooms, and companies must show their work, explain decisions, and make raw data accessible. Conspiracies thrive in the shadows, and the best antidote is sunlight.

Enact meaningful legal protections for identity: Your face, your voice, your likeness should belong to you – not to anyone who can scrape a video. Denmark’s proposal to give citizens copyright-like control over their own digital presence is a promising start. Might the U.S. benefit from its own version? It’s an idea worth exploring.

Create rapid-response teams for synthetic reputational attacks: Every organization and public figure needs a playbook for when – not if – they’re targeted by a convincing fake. That includes technical takedown procedures, legal strategy, media coordination, and employee communication. We prepare for data breaches, and we must now prepare for identity breaches.

Final Thought

The video from the Coldplay concert seemed to bring the country together, even if just for a few days. But in the age of synthetic media, that kind of unity may be even more ephemeral, if we see it at all. One side will say, “That didn’t happen.” The other will say, “It’s right there on video.” And neither will budge.

In the not-too-distant future, it may not matter what’s true. It may only matter what people are ready to believe.

We still have time to act. But as Coldplay has been suggesting for quite a while now, we’re facing closing walls and ticking clocks.

Let’s not end up cursing missed opportunities.