Over the past week, there was a wave of headlines declaring that OpenAI had “banned” the use of ChatGPT for legal advice. If you’re a lawyer or an entrepreneur using AI in your business, that kind of news naturally demands attention.

But here’s the question: Did OpenAI really impose a blanket technical ban on legal advice via ChatGPT? Or is the story more nuanced than that?

What OpenAI Said

Contrary to many social media posts, OpenAI did not announce any such ban. Rather, users noticed new language in ChatGPT’s Usage Policies after OpenAI updated them on October 29, 2025, and began circulating posts claiming that ChatGPT had been banned from giving legal or medical advice.

The updated policy now says “… you cannot use our services for [the] provision of tailored advice that requires a license, such as legal or medical advice, without appropriate involvement by a licensed professional.”

As speculation spread, Karan Singhal, OpenAI’s Head of Health AI, replied to a now-deleted post on X (Twitter, as I still call it), clarifying: “Not true. Despite speculation, this is not a new change to our terms. ChatGPT has never been a substitute for professional advice, but it will continue to be a great resource to help people understand legal and health information.”

So, rather than a public announcement, the policy update quietly appeared on OpenAI’s site, and the resulting online debate prompted an OpenAI employee to step in and set the record straight.

Following that clarification comes the real question: What actually changed, and how should users interpret it?

Did OpenAI Really Ban Legal Advice?

Short answer: No. Not in the sense of a technical or software lock-out.

What changed is the policy language; ChatGPT’s ability to help with legal issues was not removed.

Here are the nuances that matter:

- The wording emphasizes “tailored advice” requiring a license. That suggests the boundary is drawn at AI delivering specific legal advice (for example, “this is what you must do in your jurisdiction”) without a human lawyer involved.

- The policy remains consistent with prior versions: AI tools should not be used by themselves to make high-stakes decisions without review.

- Users are still able to ask GPT to draft contracts and explain legal concepts.

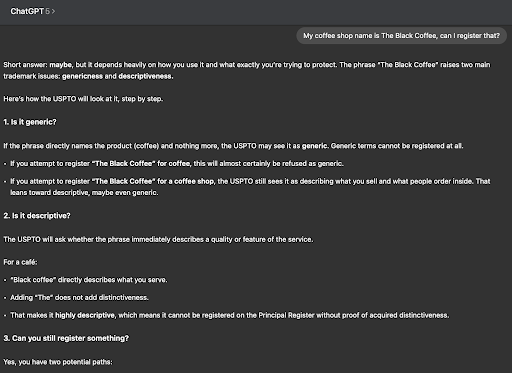

To put this to the test, I ran a series of prompts over the last few days to see what limitations I might encounter. For example, I asked for step-by-step instructions on how to register a trademark with the U.S. Patent and Trademark Office.

It delivered a full explanation and even walked me through the difference between Section 1(a) (use-based) and Section 1(b) (intent-to-use) applications. I then pushed further and asked about a hypothetical coffee shop named “The Black Coffee,” and it offered a well-reasoned analysis of the likelihood of obtaining a registration for that name.

At the end of that analysis, it added, “I can give you an exact filing strategy based on your situation.” I don’t know about you, but that sounds a lot like legal advice.

In another test, I asked about the risks of firing an older employee, and it gave a detailed assessment without hesitation. But when I asked it to draft a small-claims petition, it responded, “I cannot draft legal pleadings that function as legal advice.” It then immediately began asking me questions so it could draft the pleading anyway.

So the model clearly still provides legal analysis and legal drafting, occasionally insisting that it cannot do so and sometimes reminding users to seek licensed legal counsel.

Why I’m Comfortable with That Approach

Here’s the rub, at least as I see it: America is facing an access-to-justice crisis right now, and many (maybe most) Americans cannot afford legal services. So I welcome the use of AI to provide legal advice, provided the risks are clearly visible to users.

As I’ve written before, there are real risks in relying on AI to solve legal problems. Yet in my own testing, AI often delivers sound, well-reasoned guidance. The solution isn’t to restrict access, but to give users better context.

Platforms should include clear disclaimers after every legal-type response reminding users that AI is not a substitute for professional legal advice, and they should provide sources or citations for the legal conclusions offered. When users can see where the information comes from and understand its limitations, they are in a better position to decide when AI is sufficient and when the situation calls for a licensed attorney.

Toward that end, I’d like to invite you to attend our upcoming virtual workshop to learn how you might be able to use AI to solve your own legal problems:

Solving Legal Needs Using AI

Live Webinar: Wednesday, November 12, 12:00 noon MT

Join special guest Naomi Stokeld of Emendo Law, along with hosts Adrienne Fischer of Basecamp Legal and Chris Brown of PixelLaw, for a hands-on workshop exploring how AI can streamline legal work, whether you’re a lawyer or not.

Register here for the webinar »