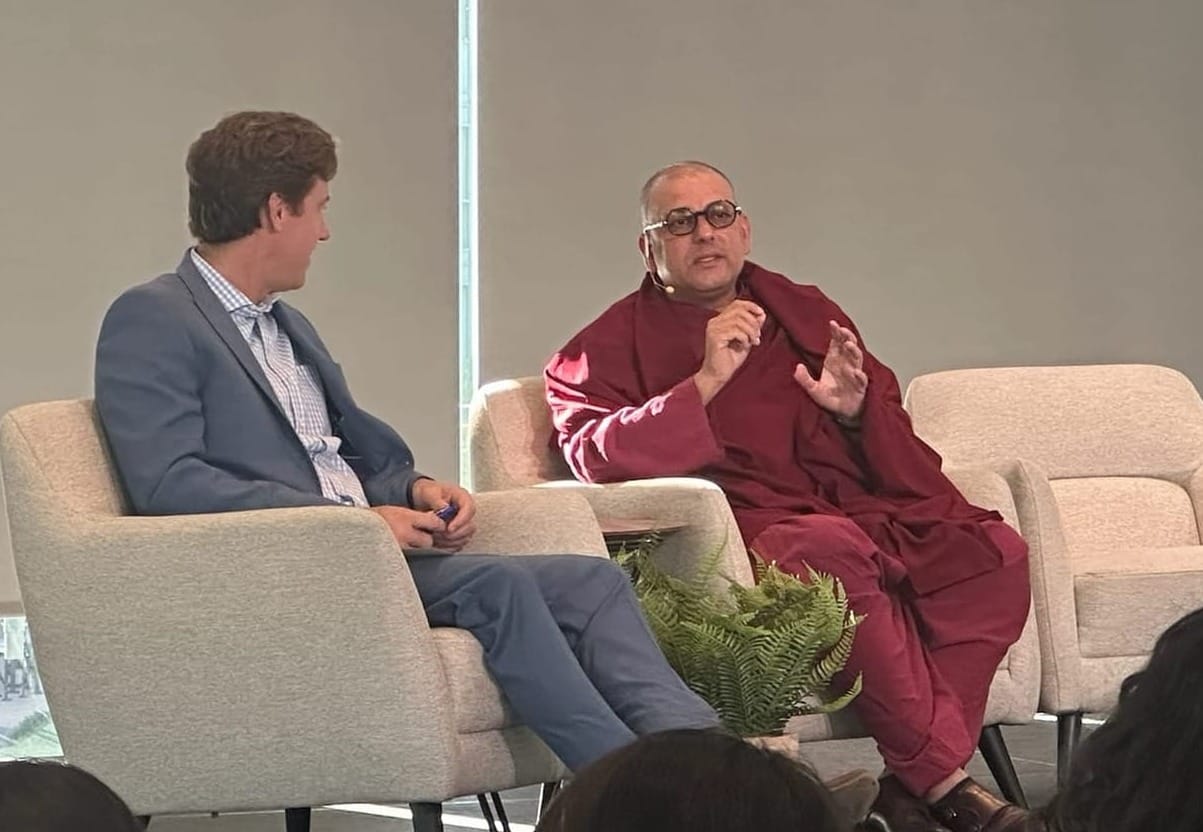

This was not your average, everyday tech talk. It was a fireside chat featuring Venerable Tenzin Priyadarshi and Denver Mayor Mike Johnston, who introduced him as “a good friend of mine” before proceeding to engage him in a forthright discussion about AI and ethics, and how we as humans might gently rewire ourselves.

What the audience heard last week was a grounded conversation about how cities and companies should build with AI – but even more fundamentally, how we as humans need to reevaluate how we think about ethics, empathy, and trust. And throughout the discussion, Venerable Tenzin provided solid ideas on what each of us can do to make the world just a little bit better.

Who is Venerable Tenzin? A fascinating individual, for starters. He entered a Buddhist monastery at the age of 10, later studied at Harvard, and now leads the Dalai Lama Center for Ethics and Transformative Values at MIT. He’s a “philosopher, educator, and polymath monk” who has also spent years around engineers, entrepreneurs, and policymakers. His book, Running Toward Mystery: The Adventure of an Unconventional Life, has been described as an honest, enthralling, and elegant memoir about guidance, uncertainty, and the teachers who shaped him – a story about searching and remaining curious while doing real work in the world.

Venerable Tenzin's Dalai Lama Center is not a traditional think tank; in fact, he calls it a “think-and-do tank.” That phrasing matters. It’s an ever-so-subtle jab at research that never leaves the page. His Center turns ethics into practice – building curricula, running decision labs, and prototyping tools that teams can drop into product roadmaps and public-service pilots. In short, he's as comfortable in labs and city halls as he is in monasteries.

Johnston opened with the right prompt for an AI-focused audience: As we adopt AI to deliver better services, how do we stay connected to our humanity? Venerable Tenzin answered by focusing on the following three main ideas.

What if 'ethical imagination' is what we need?

“For the last 15 years, I've been mostly focused on trying to come up with useful, innovative methods of ethical imagination, or promoting ethical imagination, because I realized that the traditional methods of teaching ethics were not getting very far. And that was one of the sort of audacious challenges I took upon myself.”

Venerable Tenzin went on to say that he got into ethical thinking around technology because he had spent years surrounded by people who believed that designing new technologies would, by itself, solve all the world's problems.

“And one of the things that I quickly realized is that, like most good engineering issues, it's all about how you frame the challenge, how you frame the problem. And it does turn out at times that we are designing a lot of solutions without framing the problems properly. So we don't know what the solutions are actually for ... and in doing so, we actually end up creating a lot more new problems.”

He pointed to social media as the cautionary tale: Early warnings about the mental health of our country's youth were easy for many to ignore, at least until the bill came due some years later.

The still-unfolding lesson of social media

Johnston asked for guidance that students, parents, and builders could use regarding social media: How do you engage without getting twisted up? Venerable Tenzin described a pattern that many in the room likely recognized:

“[You're having] a great time. You post some photos, and every 10 minutes you’re waiting to see, how many thumbs up do you get? 15 minutes go by… You start self-doubting… The designers of these algorithms… manipulate this algorithm of validation… to the point that it starts creating issues about self image… So the more time you spend on it… it’s actually messing up with your behavioral algorithm.”

Johnston connected the dots: “You don’t just lose the affirmation. You lose maybe the purity of the experience that was once –”

“Joyful, right?” Venerable Tenzin finished the mayor's thought.

Another practical point from Venerable Tenzin: “You’re not in the experience because you’re so concerned about how to record that experience to be able to share with everybody else.”

Treat empathy like infrastructure

Next, Venerable Tenzin addressed empathy, or the lack thereof, as something far more serious than a nicety: “Lack of empathy is a public health issue. Not a nicety. A public health issue. Compassion is a game-changer – it supports resilience, problem-solving, and the kind of society you actually want to live in.”

He went on to talk about small cues that make kindness easier to practice and to feel, because emotion spreads:

“Human emotion is contagious, highly contagious. You cannot hide it. If you are a kinder human being, that kind of contagion phenomena is much more useful, much more beneficial for society, and it’s much needed.”

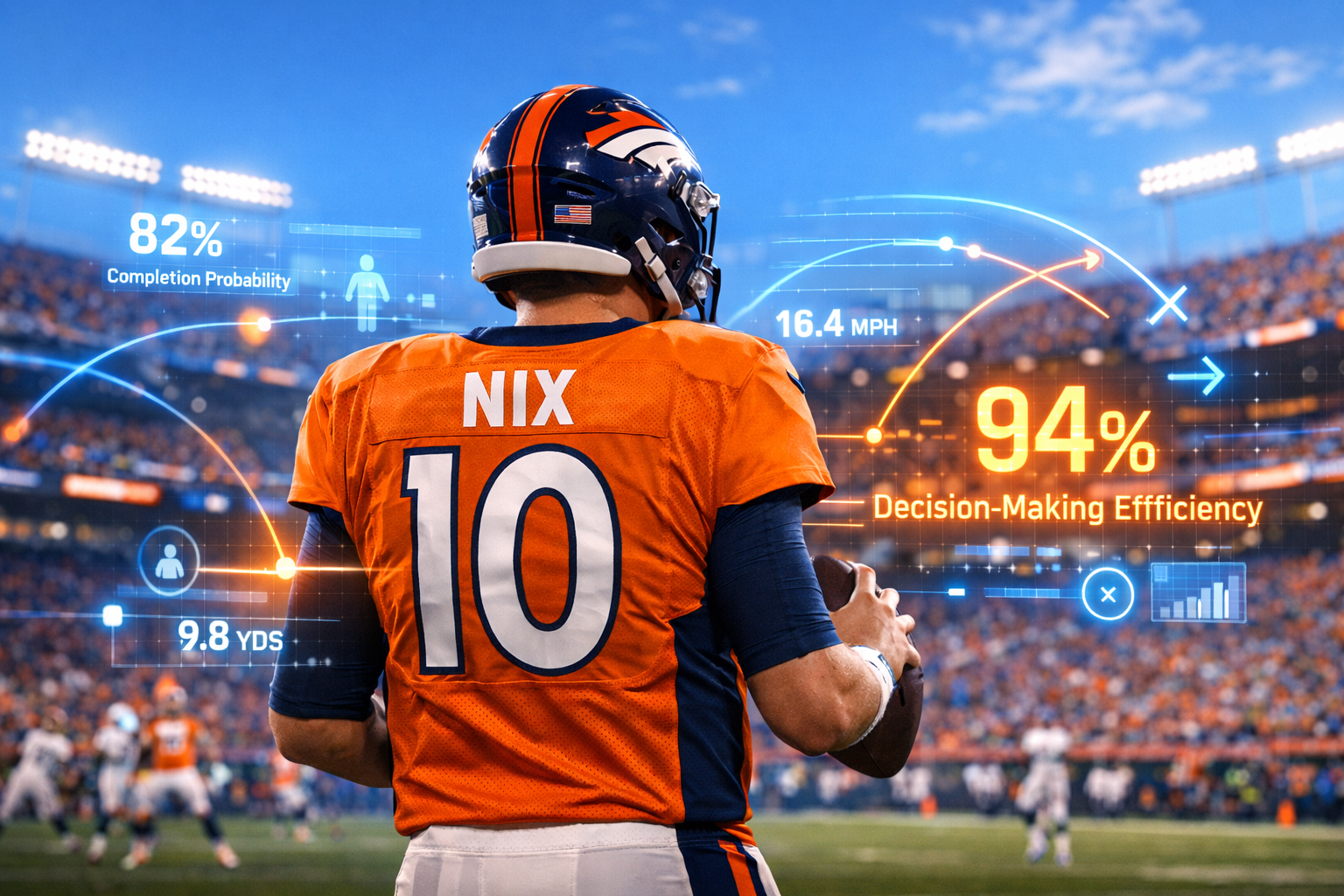

Rebuild trust by centering care and co-design

“Governments are often 10 to 15 years behind technology. Cities can end up as consumers of these technologies… Most technologies are not designed with care for people as the first thing in their mind. And so as city leaders… you have to bring that to the forefront: How does it really benefit my people… in the short and long term?”

Venerable Tenzin sees a practical role for AI in earning trust, but only if it’s used to listen and adapt:

“The neat thing about AI systems is that you can get real time feedback. You can create very fast social movements… very quickly, you recognize what people would like or not like… where they are divided… These are mechanisms that government institutions can utilize to build civic trust.”

Bring people in as co-designers, he added; don’t rely on long surveys. Frame a few good questions, deploy, learn, adjust.

Speaking of surveys, Venerable Tenzin showed his strong sense of humor when he offered this advice:

“Don’t send out a 50-questionnaire survey. Do not do that. I often joke with my friends in Bhutan who, sort of, created the genesis of Gross National Happiness. And I jokingly tell them that, you know, if it’s a 200 questionnaire, by question 20, their happiness level is going down, right? [Ultimately], the survey doesn’t tell us much.”

Through it all, Johnston kept the conversation grounded in city service: How do we provide better outcomes without reducing residents to case numbers?

The broader message to builders was plain: If your product reshapes attention, dignity, or self-image, that’s not a side effect – that's a problem, and you should not ignore it. You should measure it, design around it, improve upon it.

Two supporting ideas that deserve attention

First, there's bias literacy. According to Venerable Tenzin:

“The presumption… is that humans are biased regardless of training… This should be part of the education system… There could be AI educational tools that help humans learn about their own biases – to detect them and reprogram them… At scale… these tools may do better… simply because of reach.”

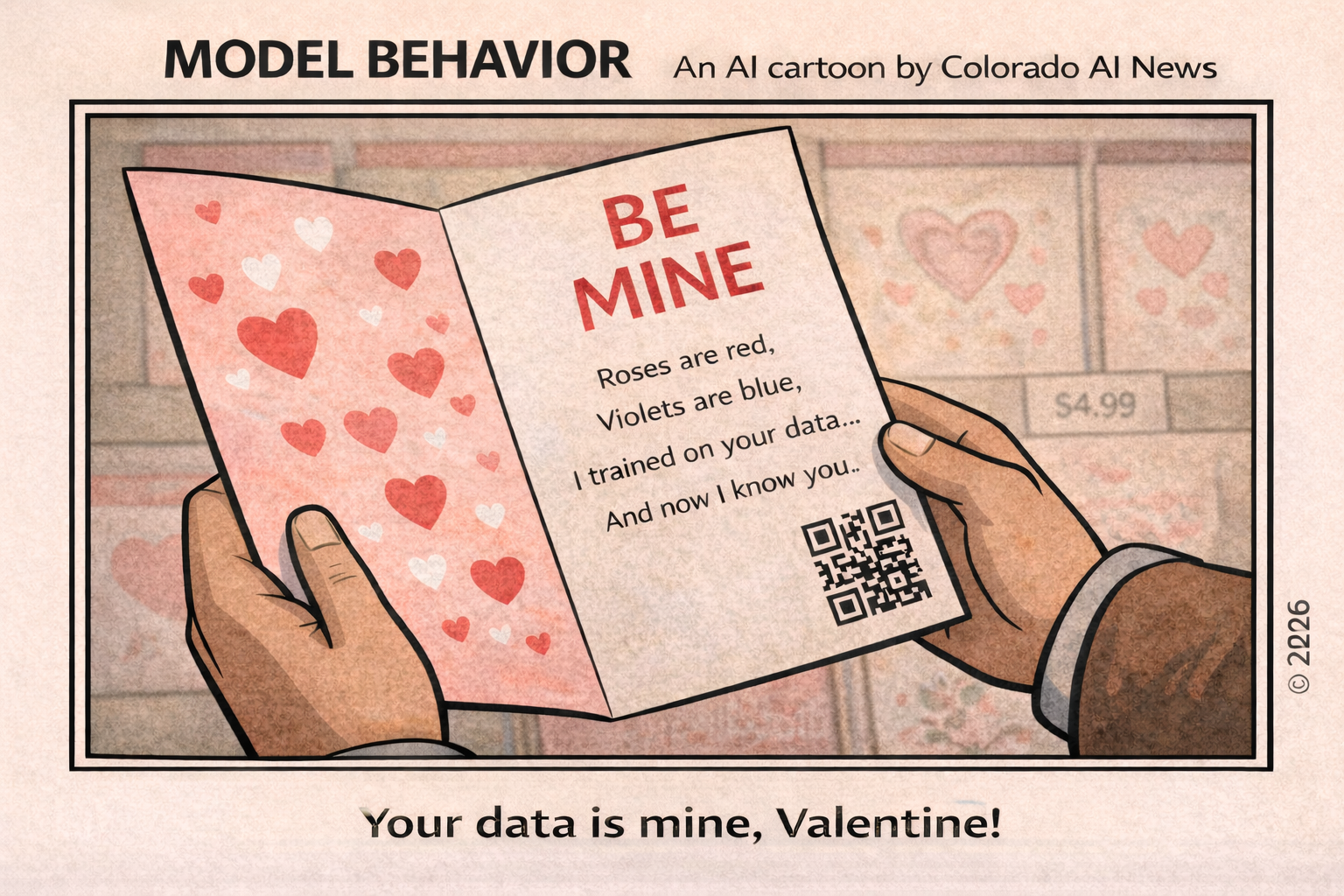

And there's the idea of dignity as the anchor for privacy:

“[Different] generations treat privacy very differently… But there are things like human dignity – that’s something that most age groups, most people would agree on, that it’s a good idea to protect human dignity.”

Venerable Tenzin also noted, briefly, that compute has a real environmental cost and that leaders should keep energy use visible in plans and procurement. On culture, he pointed to publicly naming contributors as a simple way for leaders to encourage shared ownership: “Understand that the more credit you give, the more shared encouragement you promote in society. You empower people by giving them this sense of credit.”

Self-righteousness is easy… and hope is on us

“Maintain curiosity and openness… Don’t always think that a critique of your opinion is a critique of your identity… Prefer discernment over judgment. Self-righteousness is easy; being ethical is much more challenging.”

Building upon that point, Venerable Tenzin offered one final, critical lesson for everyone: On the days when nothing appears to give us hope, it's on us to bring hope into the world and keep building institutions that last.

For Denver, other cities, and organizations of every type, the guidance is straightforward: If a tool makes it easier for people to be seen, heard, and helped, it deserves serious consideration. If it trades dignity for speed, take a pass. And finally, build ethics, empathy, and care in at the beginning of the work rather than bolting them on at the end. That's the ethical mandate.