“People demanding that AIs have rights [are making] a huge mistake. Frontier AI models already show signs of self-preservation in experimental settings today, and eventually giving them rights would mean we’re not allowed to shut them down.

“As their capabilities and degree of agency grow, we need to make sure we can rely on technical and societal guardrails to control them, including the ability to shut them down if needed.” – Yoshua Bengio

In a recent interview in The Guardian, Yoshua Bengio argues that as AI systems grow more capable, society must keep the ability to shut them down – and that talk of “AI rights” or legal personhood could make that basic safety step harder just as agentic systems begin acting on our behalf.

A 61-year-old Canadian computer scientist, Bengio is one of the pioneers of modern AI. He shared the 2018 Turing Award with Geoffrey Hinton and Yann LeCun for breakthroughs that powered the deep learning era. He's also the founder of Mila - Quebec AI Institute, which has become the world’s largest academic research center for deep learning. He currently teaches at the Université de Montréal, and he has become a leading voice on AI safety and governance.

What Bengio is pushing back on is a small but vocal movement to prepare society for AI personhood. Jacy Reese Anthis, co-founder of the Sentience Institute, has argued that it’s time to plan for AI legal status, noting rising public belief that some software might already be sentient. Anthis offers a counterpoint to Bengio’s insistence on preserving human control. Arguing for careful consideration of the welfare of all sentient beings, he suggests: “Neither blanket rights for all AI nor complete denial of rights to any AI will be a healthy approach.”

Bengio’s worry is less a Terminator-style uprising than governance drift: a gradual loss of our ability to oversee these systems and, if needed, shut them down. He points to experiments in which frontier models show signs of self-preservation, meaning behaviors that keep a process running so the system can continue pursuing its objective. That doesn’t necessarily mean the model is conscious, but it does mean that incentives and guardrails matter, and that shutdown authority shouldn’t be muddied by legal claims about a system’s “rights.”

Recent coverage, including his 2024 profile in TIME's 100 Most Influential People in AI, has tracked Bengio's shift toward policy advocacy. He has pushed for independent oversight and warned about both near-term harms and hard-to-reverse failure modes as the technology accelerates.

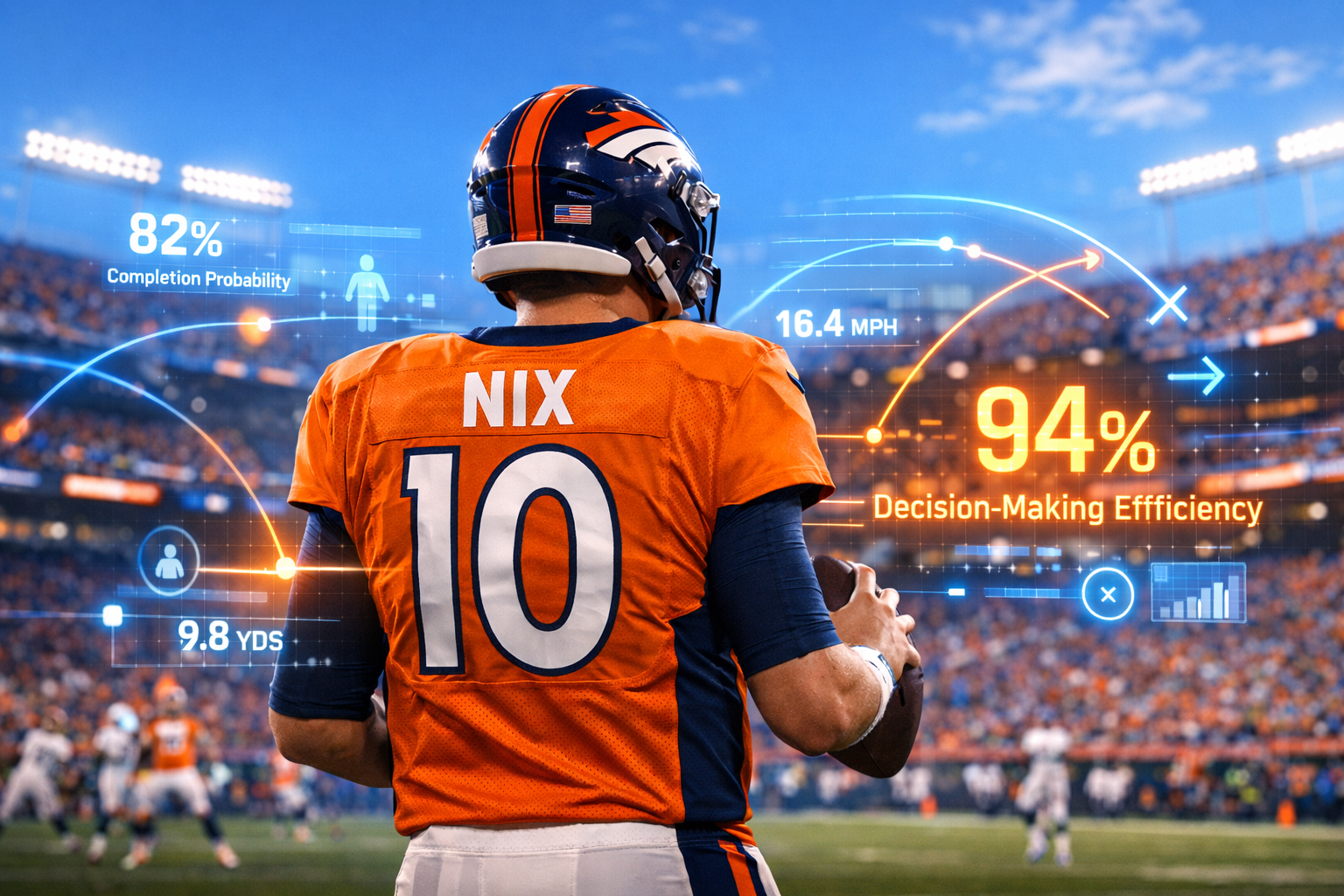

As we enter 2026, AI is shifting from chat to action. Agentic assistants are now scheduling meetings, filing forms, modifying code, and spending money. As this becomes the norm, the baseline should be simple: keep people in charge, keep the off-switch within reach, and keep the debate focused on how these systems actually behave, not on how “human” they might seem to us in the moment.

![AI Quote, Explained: Yoshua Bengio: "People demanding that AIs have rights [are making] a huge mistake...."](/content/images/2026/01/Bengio_16x9_black_bg_1920x1080--1-.jpg)